You may come across websites that use the terms goal funnel and conversion funnel interchangeably. Sometimes the term sales funnel is used as well. Whatever it is called, the funnel is invaluable to the success of your business. It tracks the customer journey from lead generation and sign-up through to the purchase, and on to making sure customers buy again.

It is especially important when you are trying to measure non-transactional goals. For e-commerce businesses, measuring the success of the website is easier because it can be gauged by profit and loss.

On a typical e-commerce website, for example, the potential customer browses the products in the menu, clicks the item they want, adds it to the cart, and then completes the purchase. Some websites require a registration process, which adds another step to the conversion funnel.

These metrics give you insight into the customer journey and let you adjust your marketing to increase the conversion rate. That saves you time and helps you get the most out of your investment.

Macro and Micro Funnels

There are two main ways to set up your conversion funnels in Google Analytics: the macro approach and the micro approach.

The macro approach refers to broader, generalized, cross-channel goals. It helps you understand your customers: how aware they are of your brand, how receptive they are to your offer, and how you can shape your message to reach them and trigger a response.

With the micro strategy, you set more deliberate goals in order to build specific, on-site funnels. You track how customers use your website, which links they click, how long they stay on the landing page, how much time they spend on the site, and whether they return.

Goal Setting

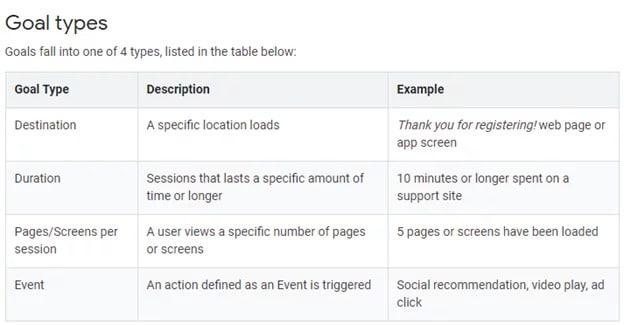

First and foremost, you need to define your goals. The goal is the basic building block of your conversion funnel strategy. If you set the wrong goals, you can lose your way. If you align your Analytics goals with your business goals, you can measure the effectiveness of your digital marketing campaign properly.

If you run an e-commerce website, for example, the overarching goal is for a customer to make a purchase. If you develop online games, you want users to download your title. If you run a news site, success can be measured by how many people your articles reach.

To determine your goals, you need to answer the following questions:

- Does my marketing campaign generate leads and sales for my business?

- How much time do users spend on my website? What is the bounce rate?

- Are the goal steps clear to users when they are on my landing page?

- Does the content of the website serve the goals I have set?

- Does my business generate enough interest through relevant traffic?

How Do I Define Goals in Google Analytics?

- Sign in to your Google Analytics account. You need administrator rights to make changes.

- Go to the "Goals" tab and open it.

- Enter a name for your goal, then enter the goal URL.

- The default match type is shown as Exact Match. Leave it selected unless you also need to account for variations of your goal URLs.

- Enter a goal value, even if you do not use your website for monetization. This step lets Google calibrate metrics such as per-visit value or page value.
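The effect of the match type can be illustrated with a small sketch. It is not Google Analytics code: the function `matches_goal`, the type labels `exact`, `begins_with`, and `regex`, and the example paths are all assumptions chosen for illustration.

```python
import re


def matches_goal(path: str, pattern: str, match_type: str = "exact") -> bool:
    """Return True if a page path counts as the goal under the given match type.

    The three match types are illustrative labels, not official identifiers.
    """
    if match_type == "exact":
        # Only the identical path counts; query strings break the match.
        return path == pattern
    if match_type == "begins_with":
        # Tolerates trailing query parameters or sub-paths.
        return path.startswith(pattern)
    if match_type == "regex":
        # Most flexible: any path the regular expression finds a match in.
        return re.search(pattern, path) is not None
    raise ValueError(f"unknown match type: {match_type}")


print(matches_goal("/thank-you", "/thank-you"))
print(matches_goal("/thank-you?order=17", "/thank-you"))
print(matches_goal("/thank-you?order=17", "/thank-you", "begins_with"))
```

The second call fails to match because of the query string, which is the kind of URL variation that would justify moving away from exact matching.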

When you have finished these settings, add the funnel by ticking the checkbox before you save the goal. You can then enter the names and URLs of the steps in the step fields. A funnel can have up to 20 steps.

(Note: Under the goal funnel, next to the funnel steps, you will see a box labeled "Required step". If you tick it, the funnel conversion rate counts only visits that include a pageview of step 1 before the pageview of the goal page.)
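The difference the required first step makes can be sketched in a few lines. This is a simplified model under stated assumptions, not how Google Analytics is implemented: each session is assumed to be an ordered list of page paths, and the function name and example paths are hypothetical.

```python
def funnel_conversions(sessions, first_step, goal, required=True):
    """Count sessions that reach the goal page.

    With required=True, a session only counts if first_step was viewed
    before the first pageview of the goal.
    """
    count = 0
    for pages in sessions:
        if goal not in pages:
            continue  # goal never reached in this session
        goal_index = pages.index(goal)
        if required and first_step not in pages[:goal_index]:
            continue  # goal reached, but not via the required first step
        count += 1
    return count


sessions = [
    ["/cart", "/checkout", "/thank-you"],  # entered through step 1
    ["/blog", "/thank-you"],               # reached the goal without step 1
    ["/cart", "/checkout"],                # abandoned before the goal
]

print(funnel_conversions(sessions, "/cart", "/thank-you", required=True))   # 1
print(funnel_conversions(sessions, "/cart", "/thank-you", required=False))  # 2
```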

Limitations of Goals

Google Analytics offers goal templates that you can choose from, but you can also create custom goals. Choose a simple, unambiguous goal name to avoid confusion later.

There are, however, some limitations when you set up goals. Here are just a few of them:

- You must set up goals in your Google Analytics account before the system can collect and report data for them.

- Each reporting view is limited to 20 goals. You have to overwrite goals you no longer need, or create an additional reporting view.

- Goal data is not processed like your standard Analytics data. This can be confusing, so take time to study the non-standard data processing, particularly for the features that depend on it.

- You cannot delete goals. You can, however, tell the system to stop collecting data for a specific goal.

- Once you have created your goal sets and IDs, they are permanent. You can, however, edit the goal name and type.

- You cannot track goals retroactively. There are tools, such as Heap Analytics, that let you do this.

- Google Analytics struggles to track multi-session actions. Tracking is more reliable for user actions within a single session.

The conversion funnel lets you identify the pages where friction occurs. The funnel report shows at which point users leave the goal steps. For an online shop, for example, you can see when customers added a product to the cart but stopped just short of completing the purchase.

Then you can start fixing the problem.

- It could be a technical error that kicks the user out and prevents them from buying the item.

- It could be a design issue, where too many pop-ups and sign-up prompts put the potential customer off.

- The steps could be confusing, leading users to abandon the process in frustration.

Because the reporting tool shows you at which stage of the funnel the process was abandoned, you can develop potential solutions to avoid similar problems in the future.

You can also determine which goal steps are the biggest bottleneck for many users. If you see an unusually high number of visitors dropping out at a particular step, you can narrow down the likely fault.
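Finding the biggest bottleneck comes down to comparing the drop-off between consecutive steps. The following is a minimal sketch; the step names and visitor counts are invented for illustration, and `largest_drop_off` is a hypothetical helper rather than anything Google Analytics provides.

```python
def largest_drop_off(step_counts):
    """Return (from_step, to_step, drop_off_rate) for the transition
    that loses the largest share of users, or None if there is none."""
    worst = None
    for (name_a, n_a), (name_b, n_b) in zip(step_counts, step_counts[1:]):
        if n_a == 0:
            continue  # no users entered this step, so no rate to compute
        rate = 1 - n_b / n_a
        if worst is None or rate > worst[2]:
            worst = (name_a, name_b, rate)
    return worst


# Invented example data: users counted at each funnel step.
steps = [
    ("Product view", 1000),
    ("Add to cart", 400),
    ("Checkout", 300),
    ("Purchase", 90),
]

from_step, to_step, rate = largest_drop_off(steps)
print(f"Largest drop-off: {from_step} -> {to_step} ({rate:.0%})")
```

In this invented data set, the first transition loses the most users in absolute terms, but the last one loses the largest share of those who reached it, which is why the sketch compares rates rather than raw counts.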

Setting Up the Conversion Funnel for an E-Commerce Website

There are three routes you can take when creating the Google Analytics funnel report. You select them in the Conversions section, for example under Conversions > Ecommerce. For the e-commerce reports, you first need to enable Enhanced Ecommerce and make sure that all required elements are tracked.

Shopping Behavior

This report lets you review the number of sessions at each stage of the purchase funnel. You can also see how many sessions stopped midway and abandoned the process, and how many completed all the steps. At which step do you see a marked increase in abandonment? The report also includes a visualization that gives you a quick overview of the funnel.

Reverse Goal Path

Not many people use this tool because they do not fully understand what it does. In Shopping Behavior and Funnel Visualization, you can clearly follow the steps the customer takes through the goal funnel. There are cases, however, where the paths are disjointed. Lead-generation sites, for example, have contact forms that appear on several pages. The Reverse Goal Path report helps fill the gaps and lets you keep tracking customers' movements regardless of whether the form appears on the landing page, the customer directory listing, or the registration page. The one limitation is that it does not account for abandoned processes: the goal must be completed before it can be traced back.
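The idea behind a reverse goal path can be sketched as follows: start from the goal pageview and look backwards at the pages that led to it. This is a simplified model, not the report's actual implementation. The session format (an ordered list of page paths), the function name, the example paths, and the default depth of three preceding pages are all assumptions.

```python
from collections import Counter


def reverse_goal_paths(sessions, goal, depth=3):
    """Count the pages (up to `depth`) viewed immediately before the
    first goal pageview in each session that completed the goal."""
    paths = Counter()
    for pages in sessions:
        if goal not in pages:
            continue  # abandoned sessions are not part of this view
        i = pages.index(goal)
        paths[tuple(pages[max(0, i - depth):i])] += 1
    return paths


sessions = [
    ["/home", "/contact", "/thank-you"],
    ["/pricing", "/contact", "/thank-you"],
    ["/home", "/contact", "/thank-you"],
    ["/home", "/contact"],  # never completed the goal, so it is ignored
]

for path, count in reverse_goal_paths(sessions, "/thank-you").most_common():
    print(" > ".join(path), count)
```

The last session illustrates the limitation described above: because the goal was never completed, it leaves no trace in the result.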

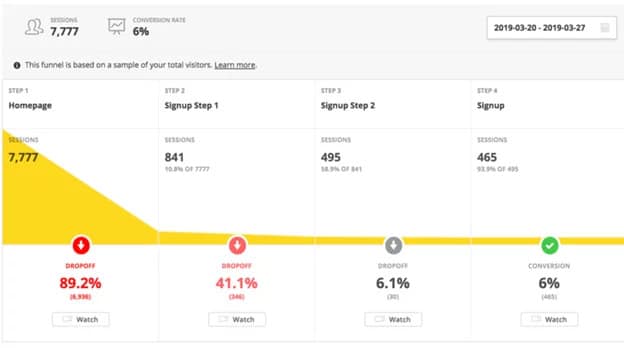

Funnel Visualization

Funnel Visualization measures how often users visit a given step. This should not be confused with pageviews. The report shows each stage together with the percentage of visitors who continue to the next step, up to the final goal URL. That percentage should not be confused with the goal conversion rate, which counts all visits to your website. The tool helps you see whether the pages you have set up contribute to your conversion rate. If you publish blog posts regularly, for example, you can see how many visitors your articles send to the conversion page.

Funnel Visualization and Shopping Behavior show you where something has gone wrong in your goal funnel. The point is not to bask in your successes, which is not an effective way to learn, but to draw lessons from your failures.

Setting Up a Conversion Funnel for a Non-E-Commerce Site

Setting up this kind of conversion funnel is harder for a non-transactional website. Google Analytics has no built-in option for it. Some companies turn to third-party apps, while others have the know-how to "hack" Google Analytics.

You can, for example, use Google Data Studio, which lets you filter segments such as users who arrive from the landing page or from a particular channel. You can also segment custom profiles of users who abandoned the funnel steps so that you can try to re-engage them.

You can also change some values and variables in the Enhanced Ecommerce funnel steps to get results that are relevant to your needs. This is doable but fairly complicated.

Funnel Conversion Rate

The funnel conversion rate tracks the rate at which leads move through the marketing funnel. It lets you measure how effectively your marketing efforts achieve their goals as leads approach the end of the sales funnel. You can also use the funnel conversion rate to adjust your website so that it serves your goals.

To calculate the funnel conversion rate, you can use the following formula:

(Number of leads that move on to the next stage ÷ number of leads in the current funnel stage) × 100
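The formula above translates directly into code. Here is a minimal sketch with invented numbers; the function name `stage_conversion_rate` and its parameters are illustrative and not part of any Google Analytics API.

```python
def stage_conversion_rate(leads_in_stage: int, leads_advancing: int) -> float:
    """Percentage of leads in a funnel stage that move on to the next stage."""
    if leads_in_stage <= 0:
        raise ValueError("leads_in_stage must be positive")
    return leads_advancing / leads_in_stage * 100


# Invented example: 200 leads in the stage, 50 of them advance.
print(stage_conversion_rate(200, 50))  # 25.0
```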

You can also set the reporting frequency to suit your needs.

Even if your conversion rate looks good, you still need to review every funnel to identify bottlenecks. Check the numbers for each section of the funnel from top to bottom. Determine which channel is underperforming, then look for ways to optimize it. If you find other bottlenecks, address those too.

However, make sure you finish optimizing one channel before moving on to the next, so that your strategies do not overlap.

The US Consumer Electronics Report 2019 found that the average visit-to-purchase conversion rate across industries is about 3%.

The funnel conversion rate depends on your industry. According to the report, the conversion rate in the electronics industry was only 1.4%, which is rather poor. If your conversion rate is between 3% and 5%, you can have confidence in your marketing strategy. As long as you do not fall below 2%, you are in good shape.

Conclusion

Conversion funnels in Google Analytics are a useful tool that helps your business calibrate its marketing campaigns, create a better experience for your customers, and fix bottlenecks on your website. By spotting a problem as early as possible, you can optimize the channel right away and improve its performance. Make sure you identify your funnels and goals in the earliest stages of your implementation process.